Part 9 - Apstra Blueprints in Terraform


Create blueprints in Terraform.

This step uses a Terraform resource to create an Apstra Blueprint, and uses a handful of other Terraform resources to set up configurations within the new Blueprint.

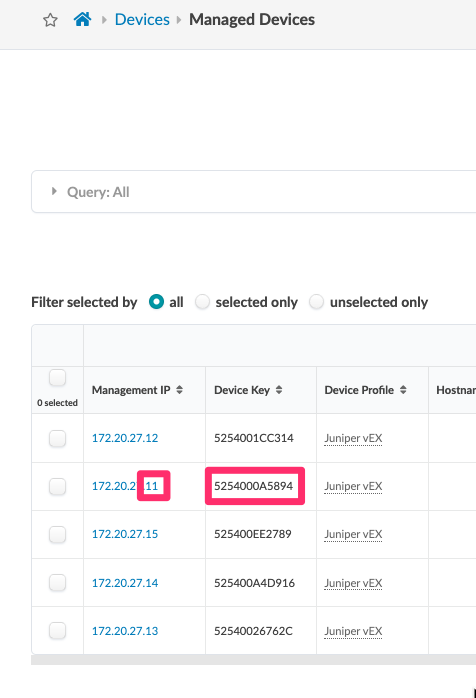

When assigning virtual Juniper switches to roles in our virtual datacenter fabric, we must be able to identify each switch unambiguously by its serial number (the Apstra Device Key).

Use the Devices → Managed Devices screen to copy the values for the device key to the locals section of the blueprints.tf file.
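Because the device keys are copied by hand, a quick sanity check can catch paste errors before Terraform talks to Apstra. The following is a minimal sketch, not part of the lab guide: it assumes the `local.switches` map defined in `blueprints.tf` in the next section, and it assumes device keys are 12 uppercase hexadecimal characters, a format inferred only from the sample keys in this lab. The names `malformed_device_keys`, `duplicate_device_keys`, and `device_key_problems` are hypothetical.

```hcl
locals {
  # Switch names whose key does not match the ASSUMED format
  # (12 uppercase hex characters, inferred from the sample keys).
  malformed_device_keys = [
    for name, key in local.switches : name
    if !can(regex("^[0-9A-F]{12}$", key))
  ]

  # True when the same device key was pasted for more than one switch.
  duplicate_device_keys = length(distinct(values(local.switches))) != length(local.switches)
}

# Hypothetical output that surfaces both checks in the plan/apply summary.
output "device_key_problems" {
  value = {
    malformed  = local.malformed_device_keys
    duplicates = local.duplicate_device_keys
  }
}
```

If your lab's device keys use a different format, adjust or drop the regular expression; the duplicate check does not depend on it.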

| For reference, you may want to consult the corresponding page in the step-by-step GUI-based lab guide: Lab Guide 1 - Juniper |

Create a blueprints.tf file

- In your working directory, create a file called `blueprints.tf`. We will start by defining a local variable which identifies the device keys and names, while also keeping track of the IP address assigned to each device in the comments.

- Identify the data associated with the `locals.switches` information. Note that the IP addresses have a prefix followed by a suffix. The suffix is the information that identifies which switch does what job. For instance, the information below identifies the device with the suffix `.11` as the switch ID `spine1`.

- Copy the Device Key value into the area of the `blueprints.tf` file next to the matching commented IP address suffix.

- Paste the rest of the Terraform data into `blueprints.tf` to finish construction of the file, and save your work.

```hcl
locals {
  switches = {
    spine1                  = "5254000A5894" // <ip_prefix>.11
    spine2                  = "5254001CC314" // <ip_prefix>.12
    apstra_esi_001_leaf1    = "52540026762C" // <ip_prefix>.13
    apstra_esi_001_leaf2    = "525400EE2789" // <ip_prefix>.15
    apstra_single_001_leaf1 = "525400A4D916" // <ip_prefix>.14
  }
}

# https://cloudlabs.apstra.com/labguide/Cloudlabs/4.1.2/lab1-junos/lab1-junos-6_blueprints_.html

# Instantiate a blueprint from the previously-created template
resource "apstra_datacenter_blueprint" "lab_guide" {
  name        = "apstra-pod1"
  template_id = apstra_template_rack_based.lab_guide.id
}

# Assign previously-created ASN resource pools to roles in the fabric
locals {
  asn_roles = toset(["spine_asns", "leaf_asns"])
}

resource "apstra_datacenter_resource_pool_allocation" "lab_guide_asn" {
  for_each     = local.asn_roles
  blueprint_id = apstra_datacenter_blueprint.lab_guide.id
  role         = each.key
  pool_ids     = [apstra_asn_pool.lab_guide.id]
}

# Assign previously-created IPv4 resource pools to roles in the fabric
locals {
  ipv4_roles = toset(["spine_loopback_ips", "leaf_loopback_ips", "spine_leaf_link_ips"])
}

resource "apstra_datacenter_resource_pool_allocation" "lab_guide_ipv4" {
  for_each     = local.ipv4_roles
  blueprint_id = apstra_datacenter_blueprint.lab_guide.id
  role         = each.key
  pool_ids     = [apstra_ipv4_pool.lab_guide.id]
}

# Discover details (we need the ID) of an interface map using the name supplied
# in the lab guide.
data "apstra_interface_map" "lab_guide" {
  name = "Juniper_vEX__slicer-7x10-1"
}

# Assign interface map and system IDs using the map we created earlier
resource "apstra_datacenter_device_allocation" "lab_guide" {
  for_each         = local.switches
  blueprint_id     = apstra_datacenter_blueprint.lab_guide.id
  interface_map_id = data.apstra_interface_map.lab_guide.id
  node_name        = each.key
  device_key       = each.value
  deploy_mode      = "deploy"
}

# Deploy the blueprint.
resource "apstra_blueprint_deployment" "lab_guide" {
  blueprint_id = apstra_datacenter_blueprint.lab_guide.id
  comment      = "Deployment by Terraform {{.TerraformVersion}}, Apstra provider {{.ProviderVersion}}, User $USER."
  depends_on = [
    # Lots of terraform happens in parallel -- this section forces deployment
    # to wait until resources which modify the blueprint are complete.
    apstra_datacenter_device_allocation.lab_guide,
    apstra_datacenter_resource_pool_allocation.lab_guide_asn,
    apstra_datacenter_resource_pool_allocation.lab_guide_ipv4,
  ]
}
```
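The comments in `locals.switches` record each switch's IP address suffix, but comments are invisible to Terraform. If you would like that mapping available as data (for example, to print it next to the device keys), one option is a second local map. This is only a sketch: `ip_prefix`, `switch_ip_suffixes`, and `switch_mgmt_ips` are hypothetical names that do not appear in the lab guide, and `192.0.2` is a documentation placeholder that you would replace with your lab's real prefix. The suffix values are taken from the comments in the file.

```hcl
locals {
  # ASSUMPTION: placeholder prefix; replace with the prefix used in your lab.
  ip_prefix = "192.0.2"

  # Suffixes copied from the comments in local.switches.
  switch_ip_suffixes = {
    spine1                  = 11
    spine2                  = 12
    apstra_esi_001_leaf1    = 13
    apstra_single_001_leaf1 = 14
    apstra_esi_001_leaf2    = 15
  }

  # Build "<ip_prefix>.<suffix>" for every switch name.
  switch_mgmt_ips = {
    for name, suffix in local.switch_ip_suffixes :
    name => "${local.ip_prefix}.${suffix}"
  }
}

# Hypothetical output pairing each switch's device key with its address.
output "switch_inventory" {
  value = {
    for name, key in local.switches :
    name => {
      device_key = key
      mgmt_ip    = local.switch_mgmt_ips[name]
    }
  }
}
```

Nothing in the blueprint resources depends on this map; it exists only to keep the key-to-address pairing in one reviewable place.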

Review what Blueprints will be created

As you probably expect, the next step is to run `terraform apply` and see which actions Terraform will take to instantiate the blueprint in Apstra.

- Run `terraform apply` and review the output before answering `yes` to the interactive prompt.

```
bwester@bwester-mbp playground % terraform apply
data.apstra_logical_device.lab_guide_servers["dual_homed"]: Reading...
data.apstra_logical_device.lab_guide_switch: Reading...
data.apstra_interface_map.lab_guide: Reading...
data.apstra_logical_device.lab_guide_servers["single_homed"]: Reading...
apstra_ipv4_pool.lab_guide: Refreshing state... [id=3a4c81a3-cb30-4d93-bc51-cedce7f18e98]
apstra_asn_pool.lab_guide: Refreshing state... [id=81c425e5-687d-41cb-9db4-66b5b2c85463]
data.apstra_logical_device.lab_guide_switch: Read complete after 1s [id=virtual-7x10-1]
data.apstra_logical_device.lab_guide_servers["dual_homed"]: Read complete after 1s [id=AOS-2x10-1]
data.apstra_logical_device.lab_guide_servers["single_homed"]: Read complete after 1s [id=AOS-1x10-1]
apstra_rack_type.lab_guide_single: Refreshing state... [id=lin0v54sspm-5u7o5tr7sq]
apstra_rack_type.lab_guide_esi: Refreshing state... [id=a8nn0wl4rcgxo0fby0eb5q]
apstra_template_rack_based.lab_guide: Refreshing state... [id=283b23e1-feb5-4f09-8f93-5a2f716ed1ea]
data.apstra_interface_map.lab_guide: Read complete after 2s [id=Juniper_vEX__slicer-7x10-1]

Terraform used the selected providers to generate the following execution plan.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # apstra_blueprint_deployment.lab_guide will be created
  + resource "apstra_blueprint_deployment" "lab_guide" {
      + blueprint_id            = (known after apply)
      + comment                 = "Deployment by Terraform {{.TerraformVersion}}, Apstra provider {{.ProviderVersion}}, User $USER."
      + has_uncommitted_changes = (known after apply)
      + revision_active         = (known after apply)
      + revision_staged         = (known after apply)
    }

  # apstra_datacenter_blueprint.lab_guide will be created
  + resource "apstra_datacenter_blueprint" "lab_guide" {
      + access_switch_count     = (known after apply)
      + build_errors_count      = (known after apply)
      + build_warnings_count    = (known after apply)
      + external_router_count   = (known after apply)
      + generic_system_count    = (known after apply)
      + has_uncommitted_changes = (known after apply)
      + id                      = (known after apply)
      + leaf_switch_count       = (known after apply)
      + name                    = "apstra-pod1"
      + spine_count             = (known after apply)
      + status                  = (known after apply)
      + superspine_count        = (known after apply)
      + template_id             = "283b23e1-feb5-4f09-8f93-5a2f716ed1ea"
      + version                 = (known after apply)
    }

  # apstra_datacenter_device_allocation.lab_guide["apstra_esi_001_leaf1"] will be created
  + resource "apstra_datacenter_device_allocation" "lab_guide" {
      + blueprint_id           = (known after apply)
      + deploy_mode            = "deploy"
      + device_key             = "52540026762C"
      + device_profile_node_id = (known after apply)
      + interface_map_id       = "Juniper_vEX__slicer-7x10-1"
      + node_id                = (known after apply)
      + node_name              = "apstra_esi_001_leaf1"
    }

  # apstra_datacenter_device_allocation.lab_guide["apstra_esi_001_leaf2"] will be created
  + resource "apstra_datacenter_device_allocation" "lab_guide" {
      + blueprint_id           = (known after apply)
      + deploy_mode            = "deploy"
      + device_key             = "525400EE2789"
      + device_profile_node_id = (known after apply)
      + interface_map_id       = "Juniper_vEX__slicer-7x10-1"
      + node_id                = (known after apply)
      + node_name              = "apstra_esi_001_leaf2"
    }

  # apstra_datacenter_device_allocation.lab_guide["apstra_single_001_leaf1"] will be created
  + resource "apstra_datacenter_device_allocation" "lab_guide" {
      + blueprint_id           = (known after apply)
      + deploy_mode            = "deploy"
      + device_key             = "525400A4D916"
      + device_profile_node_id = (known after apply)
      + interface_map_id       = "Juniper_vEX__slicer-7x10-1"
      + node_id                = (known after apply)
      + node_name              = "apstra_single_001_leaf1"
    }

  # apstra_datacenter_device_allocation.lab_guide["spine1"] will be created
  + resource "apstra_datacenter_device_allocation" "lab_guide" {
      + blueprint_id           = (known after apply)
      + deploy_mode            = "deploy"
      + device_key             = "5254000A5894"
      + device_profile_node_id = (known after apply)
      + interface_map_id       = "Juniper_vEX__slicer-7x10-1"
      + node_id                = (known after apply)
      + node_name              = "spine1"
    }

  # apstra_datacenter_device_allocation.lab_guide["spine2"] will be created
  + resource "apstra_datacenter_device_allocation" "lab_guide" {
      + blueprint_id           = (known after apply)
      + deploy_mode            = "deploy"
      + device_key             = "5254001CC314"
      + device_profile_node_id = (known after apply)
      + interface_map_id       = "Juniper_vEX__slicer-7x10-1"
      + node_id                = (known after apply)
      + node_name              = "spine2"
    }

  # apstra_datacenter_resource_pool_allocation.lab_guide_asn["leaf_asns"] will be created
  + resource "apstra_datacenter_resource_pool_allocation" "lab_guide_asn" {
      + blueprint_id = (known after apply)
      + pool_ids     = [
          + "81c425e5-687d-41cb-9db4-66b5b2c85463",
        ]
      + role         = "leaf_asns"
    }

  # apstra_datacenter_resource_pool_allocation.lab_guide_asn["spine_asns"] will be created
  + resource "apstra_datacenter_resource_pool_allocation" "lab_guide_asn" {
      + blueprint_id = (known after apply)
      + pool_ids     = [
          + "81c425e5-687d-41cb-9db4-66b5b2c85463",
        ]
      + role         = "spine_asns"
    }

  # apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["leaf_loopback_ips"] will be created
  + resource "apstra_datacenter_resource_pool_allocation" "lab_guide_ipv4" {
      + blueprint_id = (known after apply)
      + pool_ids     = [
          + "3a4c81a3-cb30-4d93-bc51-cedce7f18e98",
        ]
      + role         = "leaf_loopback_ips"
    }

  # apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["spine_leaf_link_ips"] will be created
  + resource "apstra_datacenter_resource_pool_allocation" "lab_guide_ipv4" {
      + blueprint_id = (known after apply)
      + pool_ids     = [
          + "3a4c81a3-cb30-4d93-bc51-cedce7f18e98",
        ]
      + role         = "spine_leaf_link_ips"
    }

  # apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["spine_loopback_ips"] will be created
  + resource "apstra_datacenter_resource_pool_allocation" "lab_guide_ipv4" {
      + blueprint_id = (known after apply)
      + pool_ids     = [
          + "3a4c81a3-cb30-4d93-bc51-cedce7f18e98",
        ]
      + role         = "spine_loopback_ips"
    }

Plan: 12 to add, 0 to change, 0 to destroy.
provider_installation {
  dev_overrides {
    "example.com/apstrktr/apstra" = "/Users/bwester/golang/bin"
  }
  direct {}

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

apstra_datacenter_blueprint.lab_guide: Creating...
apstra_datacenter_blueprint.lab_guide: Creation complete after 5s [id=d0ff756f-d76e-401b-9b98-ba023b9a2d9d]
apstra_datacenter_device_allocation.lab_guide["spine1"]: Creating...
apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["spine_loopback_ips"]: Creating...
apstra_datacenter_resource_pool_allocation.lab_guide_asn["leaf_asns"]: Creating...
apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["leaf_loopback_ips"]: Creating...
apstra_datacenter_device_allocation.lab_guide["apstra_single_001_leaf1"]: Creating...
apstra_datacenter_device_allocation.lab_guide["apstra_esi_001_leaf2"]: Creating...
apstra_datacenter_device_allocation.lab_guide["apstra_esi_001_leaf1"]: Creating...
apstra_datacenter_resource_pool_allocation.lab_guide_asn["spine_asns"]: Creating...
apstra_datacenter_device_allocation.lab_guide["spine2"]: Creating...
apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["spine_leaf_link_ips"]: Creating...
apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["leaf_loopback_ips"]: Creation complete after 3s
apstra_datacenter_resource_pool_allocation.lab_guide_asn["spine_asns"]: Creation complete after 4s
apstra_datacenter_resource_pool_allocation.lab_guide_asn["leaf_asns"]: Creation complete after 4s
apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["spine_loopback_ips"]: Creation complete after 5s
apstra_datacenter_resource_pool_allocation.lab_guide_ipv4["spine_leaf_link_ips"]: Creation complete after 5s
apstra_datacenter_device_allocation.lab_guide["spine1"]: Still creating... [10s elapsed]
apstra_datacenter_device_allocation.lab_guide["apstra_esi_001_leaf1"]: Still creating... [10s elapsed]
apstra_datacenter_device_allocation.lab_guide["apstra_esi_001_leaf2"]: Still creating... [10s elapsed]
apstra_datacenter_device_allocation.lab_guide["apstra_single_001_leaf1"]: Still creating... [10s elapsed]
apstra_datacenter_device_allocation.lab_guide["spine2"]: Still creating... [10s elapsed]
apstra_datacenter_device_allocation.lab_guide["spine1"]: Creation complete after 11s
apstra_datacenter_device_allocation.lab_guide["apstra_single_001_leaf1"]: Creation complete after 13s
apstra_datacenter_device_allocation.lab_guide["apstra_esi_001_leaf2"]: Creation complete after 13s
apstra_datacenter_device_allocation.lab_guide["spine2"]: Creation complete after 14s
apstra_datacenter_device_allocation.lab_guide["apstra_esi_001_leaf1"]: Creation complete after 14s
apstra_blueprint_deployment.lab_guide: Creating...
apstra_blueprint_deployment.lab_guide: Creation complete after 3s

Apply complete! Resources: 12 added, 0 changed, 0 destroyed.
```
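The result can also be inspected from the command line, in addition to the GUI. The following is a minimal sketch that you could append to `blueprints.tf`; the output names `blueprint_summary` and `device_node_ids` are hypothetical and not part of the lab guide. It reads only attributes that appear in the plan output above (`id`, `status`, `build_errors_count`, `spine_count`, `leaf_switch_count`, `node_id`), so it assumes the same provider version as that transcript; other versions of the Apstra provider may expose different attributes.

```hcl
# Hypothetical outputs; attribute names are taken from the plan output above.
output "blueprint_summary" {
  value = {
    id                 = apstra_datacenter_blueprint.lab_guide.id
    status             = apstra_datacenter_blueprint.lab_guide.status
    build_errors_count = apstra_datacenter_blueprint.lab_guide.build_errors_count
    spine_count        = apstra_datacenter_blueprint.lab_guide.spine_count
    leaf_switch_count  = apstra_datacenter_blueprint.lab_guide.leaf_switch_count
  }
}

# One entry per for_each key in local.switches, e.g. "spine1".
output "device_node_ids" {
  value = {
    for name, alloc in apstra_datacenter_device_allocation.lab_guide :
    name => alloc.node_id
  }
}
```

After another `terraform apply`, running `terraform output` prints these values. Note that computed attributes reflect what the provider recorded in state, which may lag behind changes made later in the Apstra GUI.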

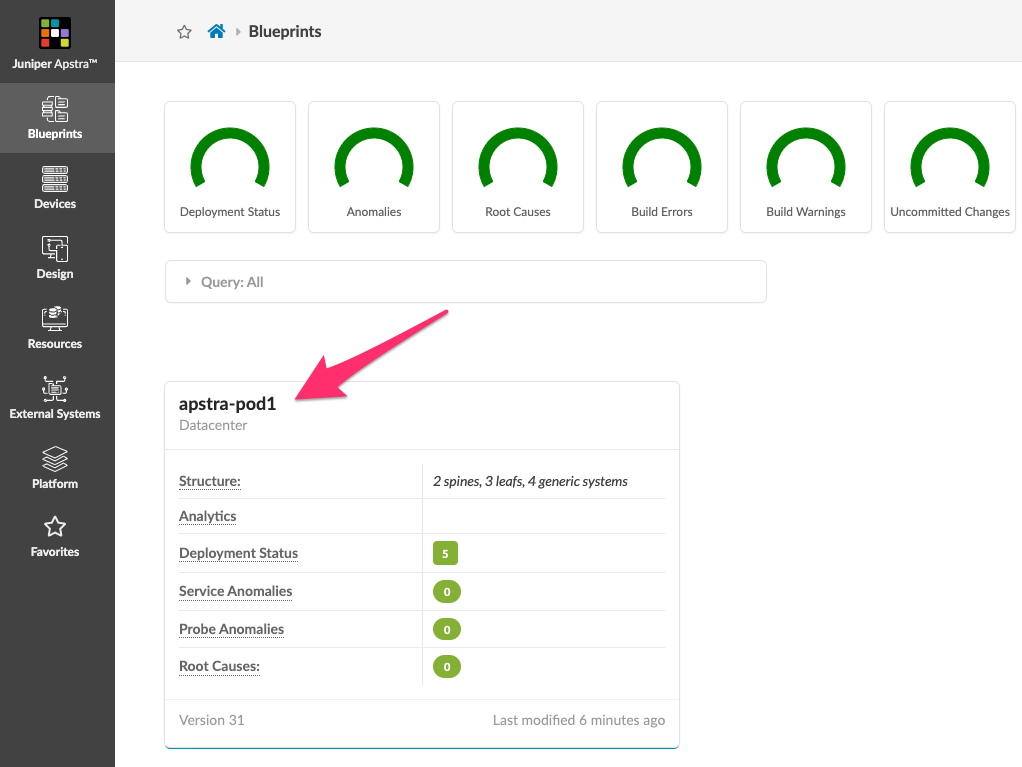

Navigate to Blueprints and you should now see your created blueprint: